The 'one simple fact' about life that gave Steve Jobs the courage to change the world

The same spark can live in all of us.

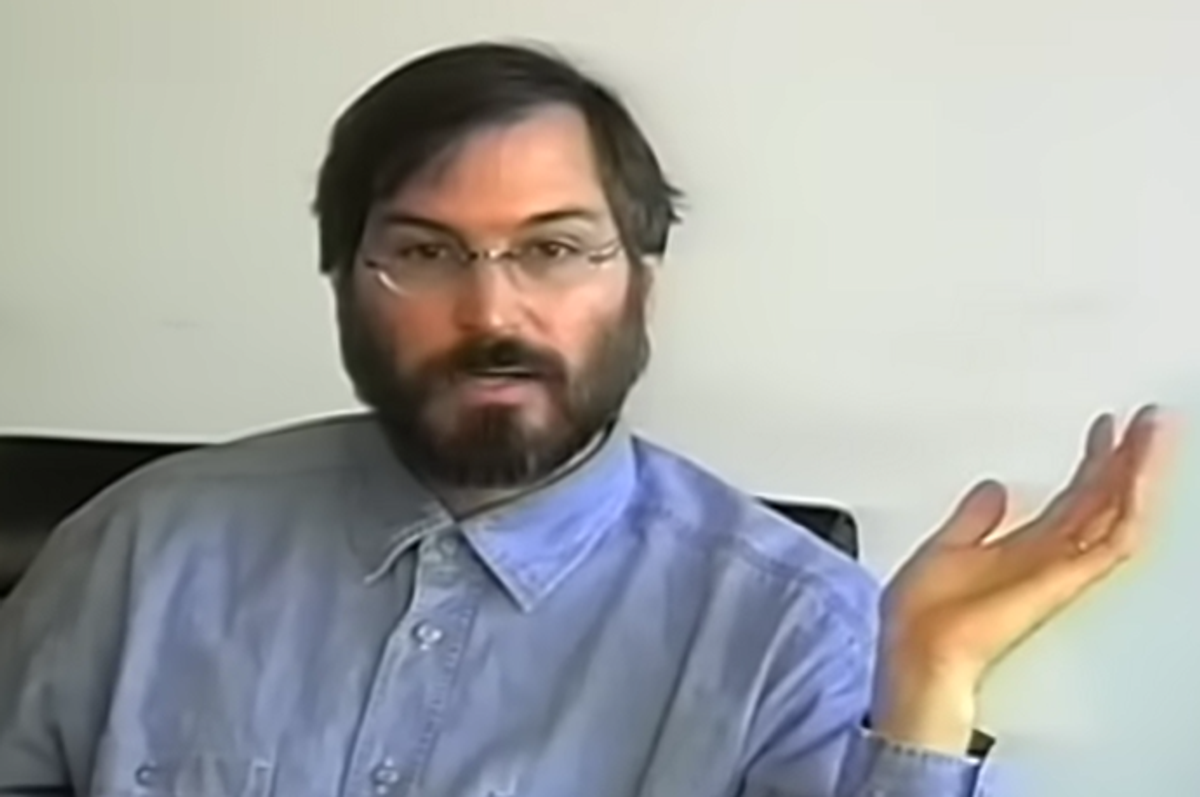

Steve Jobs speaks to the Santa Clara Valley Historical Association.

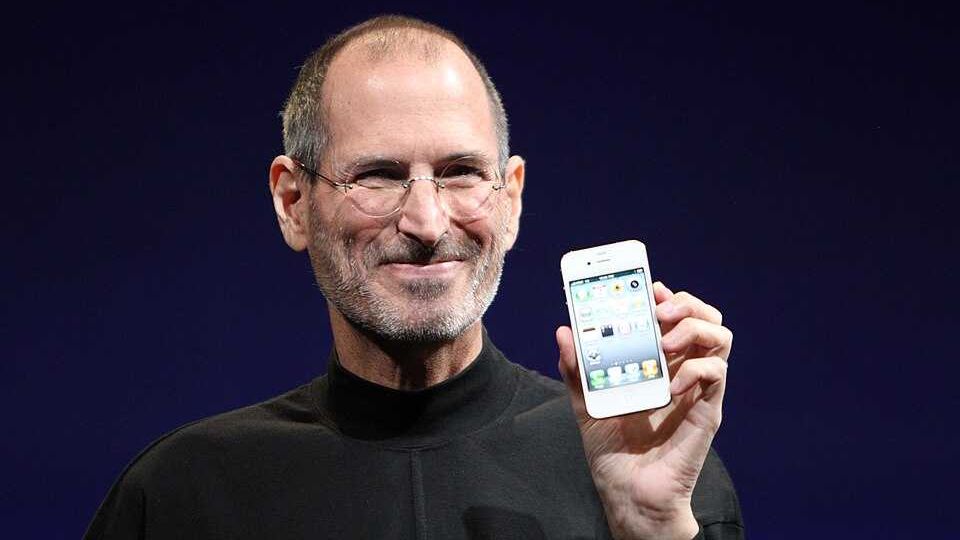

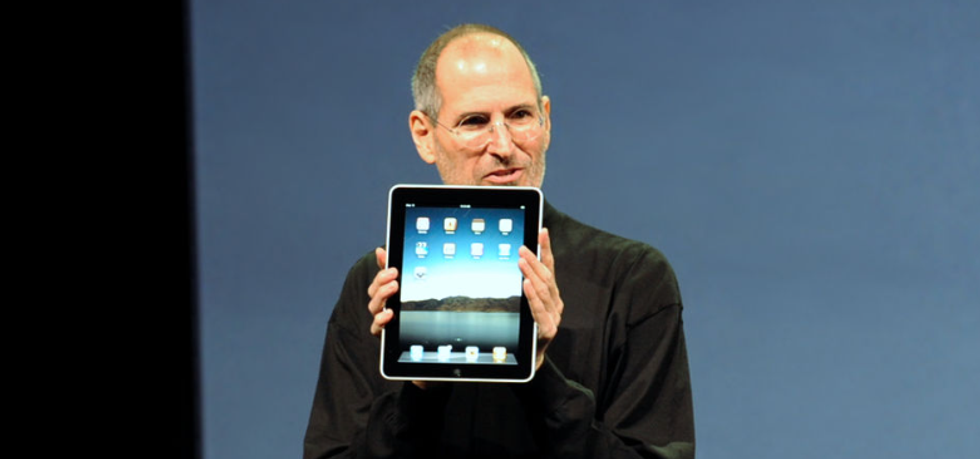

Steve Jobs was one of the greatest minds of our time because he could anticipate what people would love before they even knew it themselves. By blending art and technology, he helped create era-defining products like the iPhone, iPod, iPad, and Macintosh computer. He also helped guide Pixar to change how we see movies.

In a 1994 interview with the Santa Clara Valley Historical Association, Jobs described the epiphany that led him to embrace out-of-the-box thinking. The message was simple: you're just as smart as the people who created the parameters of the modern world, so break them and see what you can create.


The realization that changed his life

In the interview, Jobs revealed:

"When you grow up, you tend to get told that the world is the way it is and your life is just to live your life inside the world, try not to bash into the walls too much, try to have a nice family, have fun, save a little money. That's a very limited life. Life can be much broader, once you discover one simple fact, and that is that everything around you that you call life was made up by people that were no smarter than you. And you can change it, you can influence it, you can build your own things that other people can use. Once you learn that, you'll never be the same again."

"The minute that you understand that you can poke life and actually something will, you know if you push in, something will pop out the other side, that you can change it, you can mold it," Jobs continued. "That's maybe the most important thing. It's to shake off this erroneous notion that life is there and you're just gonna live in it, versus embrace it, change it, improve it, make your mark upon it."

His advice applies to everyone

Jobs's realization is empowering because he argues that the people who came before us were no more special than we are today, and that we shouldn't live our lives constrained by their limitations. Traditions from years ago may no longer serve us, and pathways to success that once worked may not be as fruitful today. Nobody knows how to live your life but you.

He added that the average person has the intelligence to make big, significant changes that can improve the lives of many. In fact, with all the information and technology available today, individuals have far more tools than those who originally created the parameters by which we live.

"I think that's very important, and however you learn that, once you learn it, you'll want to change life and make it better, cause it's kind of messed up, in a lot of ways," Jobs said. "Once you learn that, you'll never be the same again."

The beautiful thing about this realization is that Jobs wasn't trying to gatekeep being a changemaker but instead invited everyone to the party. His breakthrough was an admission that the world is never finished; it is only a rough draft that we can either keep perfecting or throw away and start something completely different.

Look around: what do you think we can improve that no one else has considered? That's how you start thinking like Steve Jobs, and after we lost him in 2011, it's clear we could use more people who see the world the way he did.