Woman’s experience scheduling an EEG highlights unconscious bias against textured hair

Though her scalp was exposed for the procedure, they still insisted she take her twists out, making it harder to get to her scalp.

Woman can't schedule EEG due to unconscious textured hair bias.

Getting a medical procedure done can be scary, or at the very least nerve-wracking, no matter how many times you've had it done. It's something that's outside of your normal routine and you're essentially at the mercy of the medical facility and providers. Most of the time, the pre-procedure instructions make sense, and if something catches you by surprise, it's usually easily explained.

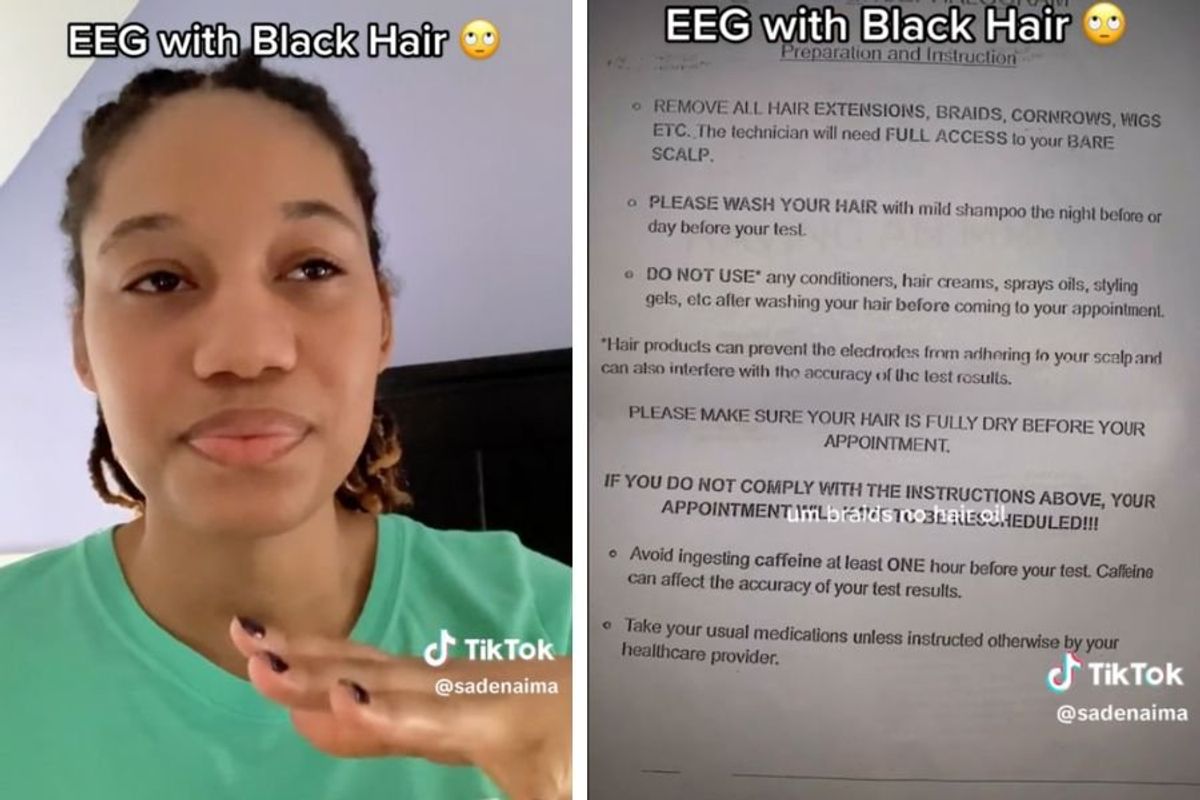

Sadé Naima recently had an experience while attempting to get an EEG that wasn't easily explained away. In fact, the entire situation didn't make sense to the TikTok creator, who experiences migraines. Naima uploaded a video to the social media platform explaining the sequence of events that happened after her doctor referred her for an MRI and an EEG.

An MRI uses a strong magnetic field and radio waves to generate images of the brain, while an EEG uses electrodes attached to the scalp to record the brain's electrical activity.

Since Naima was having consistent migraines, it seemed like a medical necessity to have these tests done to make sure nothing more serious was going on. So imagine the patient's surprise when the pre-procedure paperwork for the EEG mentioned that her hair had to be loose, which at first glance may seem harmless and inclusive of everyone. But kinky textured hair does not behave the same way as straight hair when it's "loose."

"I received a document saying to prepare for the EEG—I can't have weave, braids, no hair oil, no conditioner, like nothing in your hair," Naima explains. "And how as a Black woman that is so exclusionary for coarse and thick hair. To literally have no product in your hair and show up with it loose, you're not even reaching my scalp with that."

@sadenaima update: someone else from the medical center called me & suggested i show my hair to/talk with the technician so tbd i guess ... It's 2023, this makes no sense that the technology isn't inclusive or that the practioners aren't educated / prepared for diverse experiences. Not too much on the appearance 😂 #migraine #eeg #eegblackhair #blackhair #nyc #neurology #racialbiasinmedicine #racialbias #fyp #foryoupage

When kinky textured hair is "loose" without product, this generally means the hair is in an afro, which makes the scalp extremely difficult to get to without the use of a tool to part it and hold it out of the way. Naima called the facility for clarification and explained that her hair is currently in twists with her scalp exposed. She assured the woman on the other end that she would make sure her hair was clean and free of product, but that it would be easier for everyone involved if her hair remained in twists.

Naima went as far as to send an email with multiple pictures of her hair showing that her scalp was indeed easily accessible with her protective style in place. But the unnamed woman told her that it wasn't possible for the EEG to be completed if Naima's hair was in twists. This prompted the question, "What about people with locs?" to which the person told her they also wouldn't be able to get the procedure.

@sadenaima I sent out an email to the center & their HR and will see where that takes me to start! Thank you everyone! 🧡 i love that this has been able to help & inspire people. #racialbias #medicalracialbias #minitwists #migraine #eegblackhair #eegnaturalhair #neurology #locs #eeg #fyp

The frustrated patient searched the internet for the best way to have an EEG with kinky textured hair and came across information written by a Black doctor who was also trying to find an answer. Currently, there doesn't seem to be much information on how to appropriately give an EEG to a patient with textured hair, though many protective styles provide the direct access to the scalp needed for the procedure.

So, while policies like these aren't meant to be discriminatory, it's clear that they may cause some unintended problems. In the end, Naima's EEG was rescheduled, and after speaking to the technician who performs the procedure, she was assured her hair would not be an issue. Hopefully, the results of her EEG are favorable and she has a much more pleasant experience when preparing for the procedure.
