A factual Google search about Maria Von Trapp shows why its 'AI overview' can't be trusted

It wasn't just inaccurate. It was flat-out false.

Either Maria Von Trapp was a medical marvel, or Google's overview was wrong.

When Google launched its "AI Overview" in the spring of 2024 with messaging like "Generative AI in Search: Let Google do the searching for you" and "Find what you're looking for faster and easier with AI overviews in search results," it seemed quite promising. Instead of having to filter through pages of search results yourself, the expectation was that AI would parse through the relevant results for you and synopsize the answer to whatever question you asked.

That sounded like a great time and energy saver. But unfortunately, artificial intelligence isn't actually intelligent, and the AI overview synopsis is too often entirely wrong. We're not talking just a little misleading or inaccurate, but blatantly, factually false. Let me show you an example.

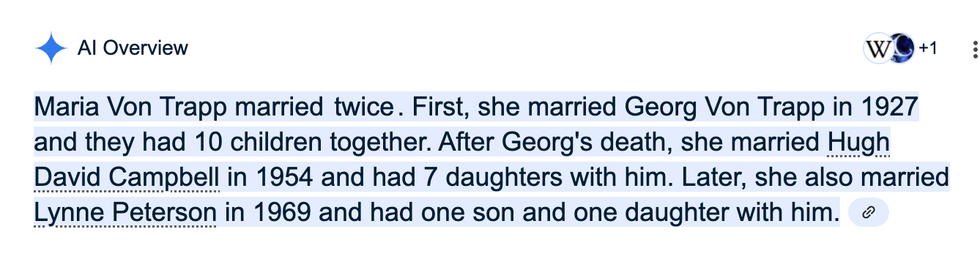

I was writing an article about the real-life love story between Maria and Georg Von Trapp, and as part of my research, I found out Georg died 20 years after they got married. I hadn't come across anything about Maria remarrying after Georg's death, so I Googled whether she had. Here's what the AI Overview said when I searched:

"Maria Von Trapp married twice. First, she married Georg Von Trapp in 1927 and they had 10 children together. After Georg's death, she married Hugh David Campbell in 1954 and had 7 daughters with him. Later, she also married Lynne Peterson in 1969 and had one son and daughter with him."

Something about that answer didn't add up—and it wasn't just that she'd supposedly married twice but had three spouses. Maria Von Trapp was born in 1905, so according to this AI Overview, she'd remarried at 49 years old and then had seven more children, and then married again at 64 years old and had another two children. So she had 19 children in total? And she had nine of them in her 50s and 60s? That seemed…unlikely.

I clicked the source link on the AI Overview, which took me to the Maria Von Trapp Wikipedia page. On that page, I found a chart where the extra two spouses' names were listed—but they very clearly weren't her spouses. Hugh David Campbell was the husband of one of Maria's daughters. And Lynne Peterson was the wife of one of her sons.

The truth is that Maria never remarried after Georg died. If I had believed the AI Overview, I would have gotten this very basic fact about her life completely wrong. And it's not like the overview pulled that information from a source that got it wrong. Wikipedia had it right. The AI Overview itself extrapolated the information incorrectly.

But the funniest part of all of this is that when I repeated the Google search "Did Maria Von Trapp remarry after Georg died?" while writing this article to see if the same result came back, the AI Overview got it right, citing the very Upworthy article I had written.

This may seem like a lot of fuss over something inconsequential in the big picture, but Maria Von Trapp's marital status is not the only wrong result I've seen in Google's AI Overview. I once searched for the cast of a specific movie and the AI Overview included a famous actor's name that I knew for 100% certain was not in the film. I've asked it for quotes about certain subjects and found quotes that were completely made up.

Are these world-changing questions? No. Does that matter? No. What matters are facts and people assuming the Google overview is correct when it might be egregiously wrong.

Objective facts are objective facts. If the AI Overview so egregiously messes up the facts about something that's easily verifiable, how can it be relied on for anything else? Since its launch, Google has had to fix major errors, like when it responded to the query "How many Muslim presidents has the U.S. had?" with the very wrong answer that Barack Obama had been our first Muslim president. As of November of 2025, it's calling the latest "Call of Duty" iteration a fake game, when it's very much real.

Some people have "tricked" Google's AI into giving ridiculous answers by simply asking it ridiculous questions, like "How many rocks should I eat?" but that's a much smaller part of the problem. Most of us have come to rely on basic, normal, run-of-the-mill searches on Google for all kinds of information. Google is, by far, the most used search engine, with 79% of the search engine market share worldwide as of March 2025. The most relied upon search tool should have reliable search results, don't you think?

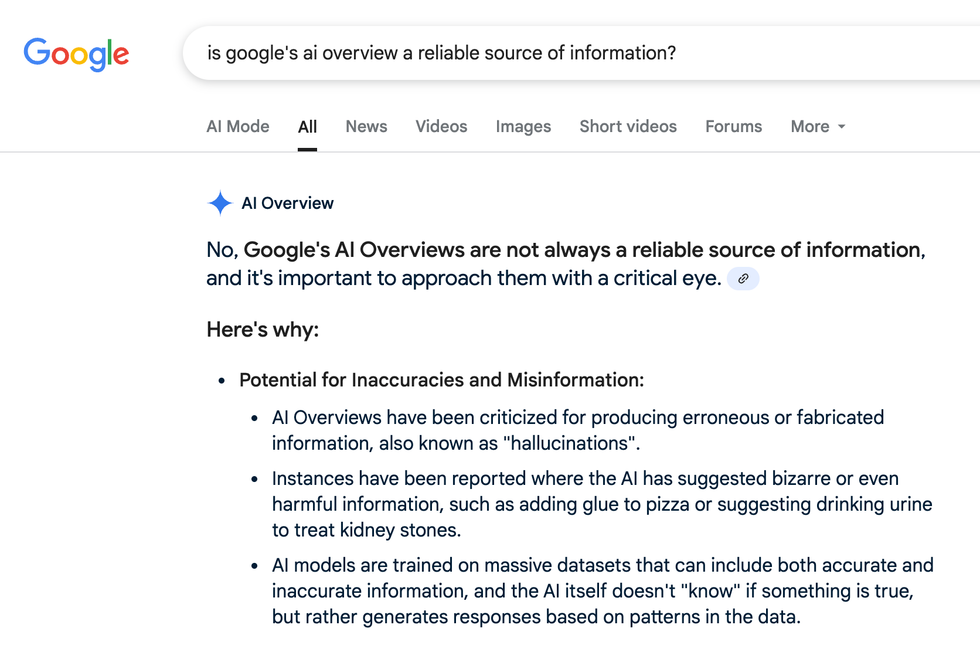

Even the Google AI Overview itself says it's not reliable:

As much as I appreciate how useful Google's search engine has been over the years, launching an AI feature that sometimes makes things up and puts them at the top of people's search results feels incredibly irresponsible. And the fact that it still spits out completely (yet unpredictably) false results about objectively factual information over a year after its launch is unforgivable, in my opinion.

We're living in an era where people are divided not only by political ideologies but by our very perceptions of reality. Misinformation has been weaponized more and more over the past decade, and as a result, we often can't even agree on basic facts, much less complex ideas. As the public's trust in expertise, institutions, legacy media, and fact-checking has dwindled, people have turned to alternative sources to get information. Unfortunately, those sources come with varying levels of bias and reliability, and our society and democracy are suffering because of it. Having Google spit out false search results at random is not helpful on that front. At all.

AI has its place, but this isn't it. My fear is that far too many people assume the AI Overview is correct without double-checking its sources. And if people have to double-check it anyway, the thing is of no real use. Just have Google give links to the sources like it used to and end this bizarre experiment with technology that simply isn't ready for its intended use.

This article originally appeared in June.

An Irish woman went to the doctor for a routine eye exam. She left with bright neon green eyes.

It's not easy seeing green.

Did she get superpowers?

Going to the eye doctor can be a hassle and a pain. It's not just the routine inconveniences that come with making a doctor's appointment; sometimes the various devices used to check your eyes' health feel invasive and uncomfortable. But at least at the end of the appointment, most of us don't look like we're turning into The Incredible Hulk. That wasn't the case for one Irish woman.

Photographer Margerita B. Wargola was just going in for a routine eye exam at the hospital but ended up leaving with her eyes a shocking, bright neon green.

At the doctor's office, the nurse practitioner was prepping Wargola for a test with a machine that Wargola had experienced before. Before the test started, Wargola presumed the nurse had dropped some saline into her eyes, as they were feeling dry. After she blinked, everything went yellow.

Wargola and the nurse initially panicked. Neither knew what was going on as Wargola suddenly had yellow vision and radioactive-looking green eyes. After the initial shock, both realized the issue: the nurse forgot to ask Wargola to remove her contact lenses before putting contrast drops in her eyes for the exam. Wargola and the nurse quickly removed the lenses from her eyes and washed them thoroughly with saline. Fortunately, Wargola's eyes were unharmed. Unfortunately, her contacts were permanently stained and she didn't bring a spare pair.

Because of her poor vision, Wargola had to wear the neon-green contact lenses to drive herself home after the eye exam, looking like a member of the Green Lantern Corps. She couldn't help but laugh at her predicament and recorded a video explaining it all on social media. Since then, her video has sparked a couple of Reddit threads and collected a bunch of comments on Instagram:

“But the REAL question is: do you now have X-Ray vision?”

“You can just say you're a superhero.”

“I would make a few stops on the way home just to freak some people out!”

“I would have lived it up! Grab a coffee, do grocery shopping, walk around a shopping center.”

“This one would pair well with that girl who ate something with turmeric with her invisalign on and walked around Paris smiling at people with seemingly BRIGHT YELLOW TEETH.”

“I would save those for fancy special occasions! WOW!”

“Every time I'd stop I'd turn slowly and stare at the person in the car next to me.”

“Keep them. Tell people what to do. They’ll do your bidding.”

In a follow-up Instagram video, Wargola showed her followers that she was safe at home with her normal eyes, and that the damaged contact lenses were so stained they had turned the saline solution in her contact lens case a bright Gatorade yellow. She wasn't mad at the nurse and, in fact, plans on keeping the lenses to wear on St. Patrick's Day or some other special occasion.

While no harm was done and a good laugh was had, it's still best for doctors, nurses, and patients alike to double-check whether contact lenses are being worn before each eye test. Otherwise, there might be more than ultra-green eyes to worry about.